Single-node configuration of hadoop-1.0.3

Step 1 - Install SSH and set up passwordless login

sudo apt-get update

sudo apt-get install openssh-server

ssh-keygen -t rsa -P ""

ssh localhost

cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

ssh localhost

(This second login should not ask for a password.)
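The key steps above can be wrapped in a small shell function so they are safe to re-run. This is a sketch, not part of the original procedure: the name authorize_key is hypothetical, and the chmod calls reflect the permissions sshd normally expects (with its default StrictModes setting it ignores an authorized_keys file that other users can write to).

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a public key to authorized_keys only if it is
# not already there, then tighten permissions so sshd will accept the file.
# Defaults to the current user's RSA key; both paths can be overridden.
authorize_key() {
  local pubkey="${1:-$HOME/.ssh/id_rsa.pub}"
  local ssh_dir="${2:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys"

  [ -r "$pubkey" ] || { echo "no public key at $pubkey" >&2; return 1; }
  mkdir -p "$ssh_dir"
  touch "$auth"
  # -x: whole-line match, -F: fixed string (keys contain '+' and '/')
  if ! grep -qxF "$(cat "$pubkey")" "$auth"; then
    cat "$pubkey" >> "$auth"
  fi
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}
```

Run authorize_key right after ssh-keygen, then check with ssh localhost; running it a second time does not add a duplicate line.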

Step 2 - Install Java

sudo apt-get install openjdk-7-jdk

java -version
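hadoop-env.sh (Step 5) needs the JDK directory, not the java binary. A minimal sketch for finding it, assuming javac from openjdk-7-jdk is on the PATH; the function name java_home_from_javac is hypothetical. On 64-bit Ubuntu with OpenJDK 7 the result should be /usr/lib/jvm/java-7-openjdk-amd64, but check the output on your own machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive a JAVA_HOME candidate from javac by resolving
# the symlink chain (/usr/bin/javac -> /etc/alternatives/javac -> ...)
# and stripping the trailing /bin/javac.
java_home_from_javac() {
  local javac_path="${1:-$(command -v javac)}"
  [ -n "$javac_path" ] || { echo "javac not found on PATH" >&2; return 1; }
  local real
  real=$(readlink -f "$javac_path") || return 1
  dirname "$(dirname "$real")"
}
```

Usage: java_home_from_javac prints the directory; compare it with the JAVA_HOME value used later in hadoop-env.sh.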

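Step 3 - Copy hadoop-1.0.3.tar.gz to Ubuntu

Copy hadoop-1.0.3.tar.gz from the Windows folder D:\siva\soft into the Ubuntu home directory (/home/siva), then unpack it there and give the siva user ownership of the extracted folder: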

ls

sudo tar -xvf hadoop-1.0.3.tar.gz

ls

sudo chown -R siva:siva hadoop-1.0.3
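Extracting as your own user, rather than with sudo, gives the same result without the separate chown. A sketch, assuming the tarball unpacks to a top-level directory named after the file (hadoop-1.0.3); extract_hadoop is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the Hadoop tarball into a destination directory
# as the current user (no sudo), so the tree is already owned by that user.
# Prints the extracted directory on success.
extract_hadoop() {
  local tarball="$1" dest="${2:-$HOME}"
  local name
  name=$(basename "$tarball" .tar.gz)   # e.g. hadoop-1.0.3
  tar -xzf "$tarball" -C "$dest" || return 1
  # Sanity check: the launcher script should now exist and be executable.
  [ -x "$dest/$name/bin/hadoop" ] || { echo "missing $dest/$name/bin/hadoop" >&2; return 1; }
  echo "$dest/$name"
}
```

For example: extract_hadoop /home/siva/hadoop-1.0.3.tar.gz /home/siva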

Step 4 - Edit .bashrc

Open /home/siva/.bashrc in an editor. It is a hidden file; to see it in the file manager, press Ctrl+H.

Add the following lines to the end of .bashrc:

export HADOOP_HOME=/home/siva/hadoop-1.0.3

export PATH=$PATH:$HADOOP_HOME/bin

Then reload the shell so the new variables take effect:

exec bash
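If the setup is repeated, appending the export lines again leaves duplicates in .bashrc. A sketch of a hypothetical helper, add_line_once, that appends a line only when that exact line is missing:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file (e.g. ~/.bashrc) only if that
# exact line is not already present, so re-running setup does not duplicate it.
add_line_once() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
# Usage (single quotes keep $PATH and $HADOOP_HOME literal in the file, so they
# are expanded each time bash reads .bashrc):
#   add_line_once "$HOME/.bashrc" 'export HADOOP_HOME=/home/siva/hadoop-1.0.3'
#   add_line_once "$HOME/.bashrc" 'export PATH=$PATH:$HADOOP_HOME/bin'
```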

Step 5 - Edit the Hadoop configuration files (in /home/siva/hadoop-1.0.3/conf)

core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/siva/hdfstemp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:54310</value>

<description>The name of the default file system. A URI whose

scheme and authority determine the FileSystem implementation. The

uri's scheme determines the config property (fs.SCHEME.impl) naming

the FileSystem implementation class. The uri's authority is used to

determine the host, port, etc. for a filesystem.</description>

</property>

</configuration>
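The same core-site.xml can also be written from the shell instead of an editor. A sketch, assuming the conf directory of a Hadoop 1.x tarball ($HADOOP_HOME/conf); write_core_site is hypothetical, drops the description elements, and overwrites any existing core-site.xml, so back the file up first if it holds other settings.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a single-node core-site.xml.
# Arguments: conf dir (default $HADOOP_HOME/conf), hadoop.tmp.dir value.
write_core_site() {
  local conf_dir="${1:-$HADOOP_HOME/conf}"
  local tmp_dir="${2:-$HOME/hdfstemp}"
  # Unquoted EOF: $tmp_dir is expanded into the file.
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$tmp_dir</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
  </property>
</configuration>
EOF
}
```

For example: write_core_site /home/siva/hadoop-1.0.3/conf /home/siva/hdfstemp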

hadoop-env.sh (uncomment the JAVA_HOME line and set it to the OpenJDK 7 directory)

# The java implementation to use. Required.

export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64
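To make this edit without opening an editor, a sketch using GNU sed (the default on Ubuntu). The name set_java_home is hypothetical; it replaces any existing export JAVA_HOME line, commented out or not, and appends one if none exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set JAVA_HOME in hadoop-env.sh.
# Relies on GNU sed's -i (in-place) and -E (extended regex) options.
set_java_home() {
  local env_file="${1:-$HADOOP_HOME/conf/hadoop-env.sh}"
  local java_home="${2:-/usr/lib/jvm/java-7-openjdk-amd64}"
  if grep -qE '^#? *export JAVA_HOME=' "$env_file"; then
    # Replace the (possibly commented) line with an active export.
    sed -i -E "s|^#? *export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}
```

For example: set_java_home /home/siva/hadoop-1.0.3/conf/hadoop-env.sh /usr/lib/jvm/java-7-openjdk-amd64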

mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:54311</value>

<description>The host and port that the MapReduce job tracker runs

at. If "local", then jobs are run in-process as a single map

and reduce task.

</description>

</property>

</configuration>
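Hadoop 1.x expects <configuration> to be the top-level element of each *-site.xml file, so a property pasted outside it is not read correctly. A quick check for that, as a sketch; check_site_files is hypothetical and only greps for the opening and closing tags, it is not a full XML validator.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report *-site.xml files in a conf directory that lack
# a <configuration> ... </configuration> wrapper. Prints each failing file and
# returns 1 if any failed.
check_site_files() {
  local conf_dir="${1:-$HADOOP_HOME/conf}" f status=0
  for f in "$conf_dir"/*-site.xml; do
    [ -e "$f" ] || continue   # glob matched nothing
    if ! grep -q '<configuration>' "$f" || ! grep -q '</configuration>' "$f"; then
      echo "missing <configuration> root: $f"
      status=1
    fi
  done
  return $status
}
```

Usage: check_site_files /home/siva/hadoop-1.0.3/conf && echo "site files look wrapped"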

Step 6 - Create the hdfstemp directory (the path set as hadoop.tmp.dir in core-site.xml)

sudo mkdir /home/siva/hdfstemp

sudo chown -R siva:siva /home/siva/hdfstemp
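To keep this directory and hadoop.tmp.dir in sync, the directory can be created from the value in core-site.xml. A sketch, assuming <name> and <value> sit on separate lines as in the file above; make_tmp_dir_from_conf is hypothetical. Run as siva for a path inside /home/siva, it needs no sudo or chown.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read hadoop.tmp.dir from core-site.xml, create that
# directory, and print it. The sed range runs from the <name> line to the next
# </value> line and extracts the text between <value> and </value>.
make_tmp_dir_from_conf() {
  local core_site="${1:-$HADOOP_HOME/conf/core-site.xml}" dir
  dir=$(sed -n '/<name>hadoop\.tmp\.dir<\/name>/,/<\/value>/s:.*<value>\(.*\)</value>.*:\1:p' "$core_site")
  [ -n "$dir" ] || { echo "hadoop.tmp.dir not set in $core_site" >&2; return 1; }
  mkdir -p "$dir" || return 1
  [ -w "$dir" ] || { echo "$dir is not writable by $(id -un)" >&2; return 1; }
  echo "$dir"
}
```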

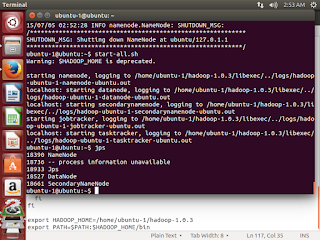

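Step 7 - Start Hadoop

Format HDFS once, before the first start (formatting again later erases the HDFS data):

hadoop namenode -format

Then start all the daemons:

start-all.sh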
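After start-all.sh, the JDK's jps command lists the running Java processes. In a single-node Hadoop 1.x setup there should be five Hadoop daemons: NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker. A sketch that reports which of them are missing from jps output; missing_daemons is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print each expected
# Hadoop 1.x daemon that is not listed. Returns 1 if any are missing.
missing_daemons() {
  local out d status=0
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
    # jps prints "<pid> <ClassName>"; -w stops NameNode matching SecondaryNameNode
    if ! printf '%s\n' "$out" | grep -qw "$d"; then
      echo "$d"
      status=1
    fi
  done
  return $status
}
# Usage: jps | missing_daemons && echo "all daemons running"
```

If a daemon is missing, its log file under $HADOOP_HOME/logs is usually the first place to look.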

( itcoordinates bigdata training in chennai )

IT coordinates, No 4, Second Floor, 233/2 Kutchery Road, Behind Raja Kalyana Mandapam, Opp to Dinakaran Daily Office, Mylapore, Chennai – 600 004. Mobile Number: +91-9940172669 / 9003156717. Phone Number: 044-42104495,96,98. Email: info@itcoordinates.com

Website: www.bigdatachennai.com

www.itcoordinates.com

http://itcoordinatestraining.com/
